Serving up Python web applications has never been easier with the suite of WSGI servers currently at our disposal. Both uWSGI and gunicorn behind Nginx are excellent performers for serving up a Flask app…

Yup, what more could you ask for in life, right? There are a number of varieties too, to suit one’s preference. Joking aside, this article is about configuring and stress testing a few WSGI (Web Server Gateway Interface) alternatives for serving up a Python web application. Here is what we cover in this post:

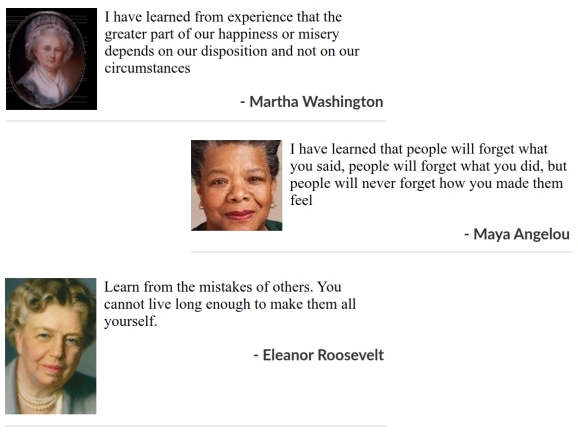

- A simple application is written with the Flask web development framework. The only API exposed is for generating a random quotation by querying a backend resource. In this case, it is Elasticsearch that has indexed a large number of quotations.

- Look at the following standalone WSGI webservers – gunicorn, uWSGI, and the default werkzeug that Flask is bundled with.

- Look at the benefit of using Nginx to front the client requests that are proxied back to the above.

- Use supervisor to manage the WSGI servers and Locust to drive the load test.

We go through some code/config snippets here for illustration, but the full code can be obtained from GitHub.

1. WSGI Servers

Unless a web site is entirely static, the webserver needs a way to engage external applications to get some dynamic data. Over time many approaches have been implemented to make this exercise lean, efficient and easy. We had the good old CGI that spawned a new process for each request. Then came mod_python that embedded Python into the webserver, followed by FastCGI that allowed the webserver to tap into a pool of long-running processes to dispatch the request to. They all have their strengths and weaknesses. See the discussion and links on this stackoverflow page for example.

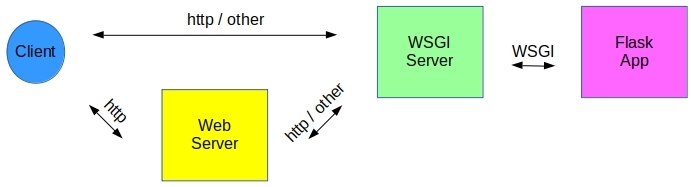

The current favorite is the WSGI protocol that allows for a complete decoupling of webservers and the applications they need to access. Here is a general schematic.

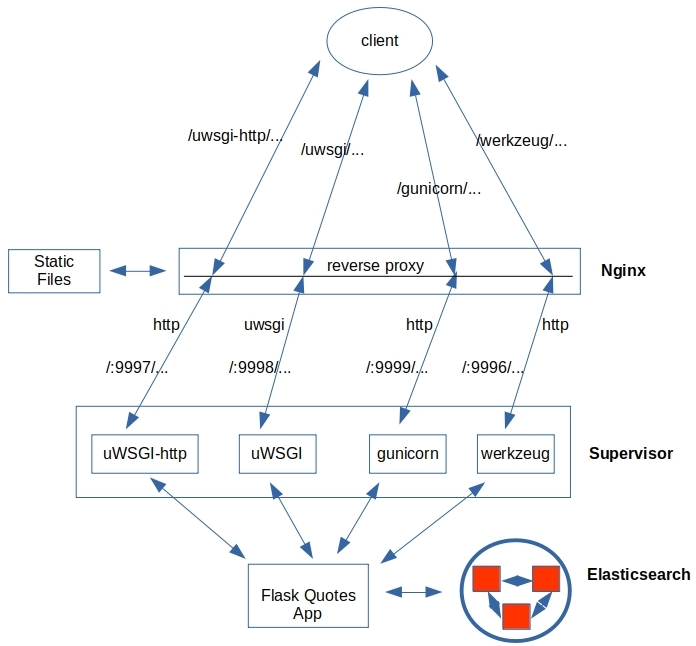

- The WSGI servers are HTTP-enabled on their own, so the client/Nginx can talk to them via HTTP. In the case of the uWSGI server, there is also the option of the binary uwsgi protocol for Nginx, with uwsgi_curl to test from the command line.

- Nginx proxies the request back to a WSGI server configured for that URI.

- The WSGI server is configured with the Python application to call with the request. The results are relayed all the way back.
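To make the server/application contract in this flow concrete, here is a minimal sketch of a WSGI application callable, independent of Flask. The names `simple_app` and `call_wsgi_app` are hypothetical and chosen only for illustration; a real WSGI server such as gunicorn or uWSGI plays the role of `call_wsgi_app`, building `environ` from the incoming request and supplying `start_response`.

```python
from wsgiref.util import setup_testing_defaults


def simple_app(environ, start_response):
    """A bare WSGI application: receives the request environ and a callback."""
    body = f"You asked for {environ['PATH_INFO']}".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]  # WSGI apps return an iterable of byte strings


def call_wsgi_app(app, path):
    """Hypothetical helper that mimics what a WSGI server does for one GET request."""
    environ = {"PATH_INFO": path, "REQUEST_METHOD": "GET"}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = response_headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body


if __name__ == "__main__":
    print(call_wsgi_app(simple_app, "/quotes/byId"))
```

A Flask `app` object is itself such a callable, which is why the same application can be handed to any of the WSGI servers discussed here.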

2. Application

The application is simple: it is just one file, quotes.py, and it serves a single GET request.

```
/quotes/byId?id=INTEGER_NUMBER
```

The app fetches the quotation document from an Elasticsearch index with that INTEGER_NUMBER as the document ID, and renders it with the quote.html template.

The images and CSS are served by Nginx when available.

```python
import logging  # standard-library logging, used later to silence werkzeug

from flask import Flask, request, render_template, send_from_directory
from elasticsearch import Elasticsearch

client = Elasticsearch([{'host': 'localhost', 'port': 9200}])
app = Flask(__name__)

# `index` holds the name of the Elasticsearch index; its definition is not shown in this snippet

@app.route('/quotes/byId', methods=['GET'])
def getById():
    docId = request.args.get('id')
    quote = client.get(index=index, id=docId)
    return render_template('quote.html', quote=quote)
```

In the absence of Nginx, the Flask app itself sends the images and CSS from its static folder.

```python
@app.route('/css/<path:path>')
def css(path):
    return send_from_directory(app.static_folder + '/css/', path, mimetype='text/css')

@app.route('/images/<path:path>')
def image(path):
    return send_from_directory(app.static_folder + '/images/', path, mimetype='image/jpg')
```

That is the entirety of the application, and it is identical no matter which WSGI server we choose to use. Here is the directory and file layout.

```
.
├── config.py    # Config options for gunicorn
├── quotes.py
├── static
│   ├── css
│   │   └── quote.css
│   ├── favicon.ico
│   └── images
│       ├── eleanor-roosevelt.jpg
│       ├── martha washington.jpg
│       └── maya angelou.jpg
├── templates
│   └── quote.html
└── wsgi.py      # Used by uWSGI
```

When using the built-in werkzeug as the WSGI server, we supply the runtime config in the quotes.py module. The config for uWSGI and gunicorn is supplied at the time of invoking the service. We will cover that next, along with the config for Nginx and for the service manager supervisord.

3. Configuration

The number of concurrent processes/workers in use by any of the WSGI servers has an impact on performance. The recommended value is about twice the number of cores but can be larger if it does not degrade the performance. We do not mess with threads per worker here as the memory footprint of our application is small. See this post for some discussion on the use of workers vs threads.
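As a rough illustration of the sizing rule, gunicorn's documentation suggests (2 × cores) + 1 as a starting point, which is in line with the "about twice the number of cores" guidance above. The sketch below only computes that starting value; the helper name `suggested_workers` is hypothetical, and the right number for a given app still has to be found by measurement.

```python
import multiprocessing


def suggested_workers(cores=None):
    """Return a starting worker count using the (2 x cores) + 1 rule of thumb."""
    if cores is None:
        cores = multiprocessing.cpu_count()
    return 2 * cores + 1


if __name__ == "__main__":
    # Prints the suggested starting point for the machine this runs on.
    print(suggested_workers())
```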

We start with 6 workers and vary it to gauge the impact. We use the same number for both gunicorn and uWSGI servers so the comparison is apples to apples. Unfortunately, there does not seem to be a way to do the same with the werkzeug server.

3.1 Supervisor

We use supervisord to manage the WSGI server processes. This allows for easier configuration, control, a clean separation of logs by app/wsgi and a UI to boot. The configuration file for each server is placed at /etc/supervisor/conf.d, and the supervisord service is started up.

```
[/etc/supervisor] ls conf.d/*
conf.d/gunicorn.conf  conf.d/uwsgi.conf  conf.d/uwsgi-http.conf  conf.d/werkzeug.conf

[/etc/supervisor] sudo systemctl start supervisor.service
```

Here is a screenshot of the UI (by default at localhost:9001) that shows the running WSGI servers, with controls to stop/start them, tail the logs and such.

The difference between uwsgi and uwsgi-http is that the latter has an HTTP endpoint while the former works with the binary uwsgi protocol. We talked about this in the context of Figure 1. Let us look at the configuration files for each. Note that the paths in the config files below are placeholders, with ‘…’ to be replaced as per the exact path on the disk.

3.2 gunicorn

The command field in the config invokes gunicorn. The gunicorn server works with the app object in quotes.py and makes the web API available at port 9999. Here is the config file /etc/supervisor/conf.d/gunicorn.conf

```ini
[program:gunicorn]
command=.../virtualenvs/.../bin/gunicorn -c .../quoteserver/config.py -b 0.0.0.0:9999 quotes:app
directory=.../quoteserver
autostart=true
redirect_stderr=true
stdout_logfile=/var/log/supervisor/gunicorn.log
```

A separate file, config.py, is used to supply the number of workers and threads, logging details and such.

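```python
workers = 6
threads = 1
worker_class = 'sync'
loglevel = 'ERROR'
# Note: gunicorn's documented setting names are `errorlog` and `accesslog`;
# the two names below are kept exactly as they appear in the original config.
error_log = '-'   # means stdout
access_log = '-'  # means stdout
access_log_format = '%(h)s %(l)s %(u)s %(t)s "%(r)s" %(s)s %(b)s "%(f)s" "%(a)s"'
```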

3.3 uWSGI

The uWSGI server can offer either an HTTP or a uwsgi endpoint, as we mentioned earlier. Using the uwsgi endpoint is recommended when the uWSGI server is behind a webserver like Nginx. The configuration below is for the HTTP endpoint. For the uwsgi endpoint, we replace “--http 127.0.0.1:9997” with “--socket 127.0.0.1:9998”.

```ini
[program:uwsgi-http]
command=.../virtualenvs/.../bin/uwsgi --chdir .../quoteserver --wsgi-file wsgi.py --http 127.0.0.1:9997 --processes 6 --threads 1 --master --disable-logging --log-4xx --log-5xx
directory=.../quoteserver
autostart=true
stdout_logfile=/var/log/supervisor/uwsgi-http.log
redirect_stderr=true
```

The config is similar to that for gunicorn, but we do not use a separate config file. The key difference is the argument ‘--wsgi-file’ that points to the module with the application object to be used by the uWSGI server.

```
[uwsgi] more wsgi.py
from quotes import app as application

if __name__ == "__main__":
    application.run()
```

3.4 werkzeug

The options for the default werkzeug server are given as part of the app.run(…) call in the quotes.py module. We disable logging so that it does not impact the performance numbers.

```python
# in quotes.py
if __name__ == "__main__":
    app.logger.disabled = True
    log = logging.getLogger('werkzeug')
    log.disabled = True
    app.run(host='localhost', port=9996, debug=False, threaded=True)
```

The only thing left for supervisord to do is to run it as a daemon.

```ini
[program:werkzeug]
command=.../virtualenvs/.../bin/python .../quoteserver/quotes.py
directory=.../quoteserver
autostart=true
redirect_stderr=true
stdout_logfile=/var/log/supervisor/werkzeug.log
```

3.5 Nginx

When Nginx is used, we need it to correctly route the requests to the above WSGI servers. We use the URI signature to decide which WSGI server should be contacted. Here is the relevant configuration from nginx.conf.

```nginx
location /werkzeug/ {
    proxy_pass http://127.0.0.1:9996/;
}
location /uwsgi-http/ {
    proxy_pass http://127.0.0.1:9997/;
}
location /uwsgi/ {
    rewrite ^/uwsgi/(.*) /$1 break;
    include uwsgi_params;
    uwsgi_pass 127.0.0.1:9998;
}
location /gunicorn/ {
    proxy_pass http://127.0.0.1:9999/;
}
```

We identify the WSGI server by the leading part of the URI and take care to proxy the request to the port that server is listening on.
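The routing rule can be mimicked in a few lines of Python. This is only a toy model of the prefix matching and prefix stripping that the location blocks above ask Nginx to do, not how Nginx implements it; the names `UPSTREAMS` and `route` are hypothetical, while the prefixes and ports are the ones from the configuration.

```python
# Mirrors the location blocks in the Nginx config: URI prefix -> backend port.
UPSTREAMS = {
    "/werkzeug/": 9996,
    "/uwsgi-http/": 9997,
    "/uwsgi/": 9998,
    "/gunicorn/": 9999,
}


def route(uri):
    """Return (port, path seen by the WSGI server) for a client URI, or None."""
    for prefix, port in UPSTREAMS.items():
        if uri.startswith(prefix):
            # Strip the server-selecting prefix but keep the leading slash.
            return port, "/" + uri[len(prefix):]
    return None


if __name__ == "__main__":
    print(route("/gunicorn/quotes/byId?id=42"))
```

In every case the WSGI server sees the same /quotes/byId path, which is why the Flask app does not need to know which route the request took.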

3.6 Summary

With all this under our belt, here is a summary diagram with the flow of calls from the client to the backend when Nginx is in place.

In the absence of Nginx, the client sends requests directly to the HTTP endpoints enabled by the WSGI servers. Clear enough, so no need for another diagram.

4. Load testing with Locust

Locust is a load testing framework for Python. The tests are conveniently defined in code, and the stats are collected as CSV files. Here is a simple script that engages Locust while also collecting system metrics at the same time. We use cmonitor_collector for gathering load and memory usage metrics.

```bash
#!/bin/bash
/usr/bin/cmonitor_collector --sampling-interval=3 --output-filename=./system_metrics.json  # start gathering memory/cpu usage stats
sleep 10
pipenv run locust -f ./load_tests.py --host localhost --csv ./results -c 500 -r 10 -t 60m --no-web
sleep 10
pid=$(ps -ef | grep 'cmonitor_collector' | grep -v 'grep' | awk '{ print $2 }')
kill -15 $pid  # stop the monitor
```

- Start a system monitor to collect the load, memory usage, etc… stats

- Run the tests described in load_tests.py on the localhost

- Save the results to files ‘results_stats.csv’ and ‘results_stats_history.csv’.

- A total of 500 users are simulated with 10 users/second added as the test starts

- The test runs for 60 minutes

- Locust also provides a web UI (by default at localhost:8089) with plots and such, but it is not used here

- Stop the system monitor

- Post-process the csv data from Locust, and the system metrics data to generate graphics that can be compared across the different WSGI alternatives

The only test we have to define is hitting the single API that we have exposed – …/quotes/byId?id=xxxx

```python
from locust import HttpLocust, TaskSet, task, between
import random, sys, json

# We have 7 ways of engaging the API via Http
url = "http://localhost/gunicorn/quotes"      # for nginx + gunicorn
#url = "http://localhost/uwsgi/quotes"        # for nginx + uwsgi
#url = "http://localhost/uwsgi-http/quotes"   # for nginx + uwsgi-http
#url = "http://localhost/werkzeug/quotes"     # for nginx + werkzeug
#url = "http://localhost:9999/quotes"         # for direct gunicorn
#url = "http://localhost:9997/quotes"         # for direct uwsgi-http
#url = "http://localhost:9996/quotes"         # for direct werkzeug

class UserBehaviour(TaskSet):
    @task(1)
    def getAQuote(self):
        quoteId = random.randint(1, 900000)
        self.client.get(url + "/byId?id=" + str(quoteId))

class WebsiteUser(HttpLocust):
    task_set = UserBehaviour
    wait_time = between(1, 3)  # wait between 1 - 3 seconds
```

The code simulates a user that waits between 1 and 3 seconds before hitting the API again with a random integer as the ID of the quote to fetch.
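For a feel of the load this produces, here is a back-of-the-envelope sketch using the numbers from the script and the test definition (500 users, 10 users/second ramp-up, 1 to 3 seconds of wait). The function names are hypothetical, and the model assumes every user simply alternates between waiting and making one request; it is an estimate, not something Locust reports.

```python
def ramp_up_seconds(users, hatch_rate):
    """Time needed to start all simulated users at the given users/second rate."""
    return users / hatch_rate


def offered_request_rate(users, min_wait, max_wait, response_time=0.0):
    """Approximate requests/second once all users are active.

    Each user repeats a cycle: wait (uniformly between min_wait and max_wait
    seconds), then issue one request that takes response_time seconds.
    """
    mean_cycle = (min_wait + max_wait) / 2 + response_time
    return users / mean_cycle


if __name__ == "__main__":
    print(ramp_up_seconds(500, 10))          # seconds until all users are running
    print(offered_request_rate(500, 1, 3))   # upper bound, ignoring response time
```

Under these assumptions the full load is reached after 50 seconds, and the request rate tops out at about 250 requests per second, dropping as the response time grows.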

5. Results

Finally, time for some results. It took a while to get here for sure, but we have quite a few moving pieces. Plotting the collected data is straightforward (I used matplotlib here), so we will skip the code for that. You can get plots.py from GitHub.

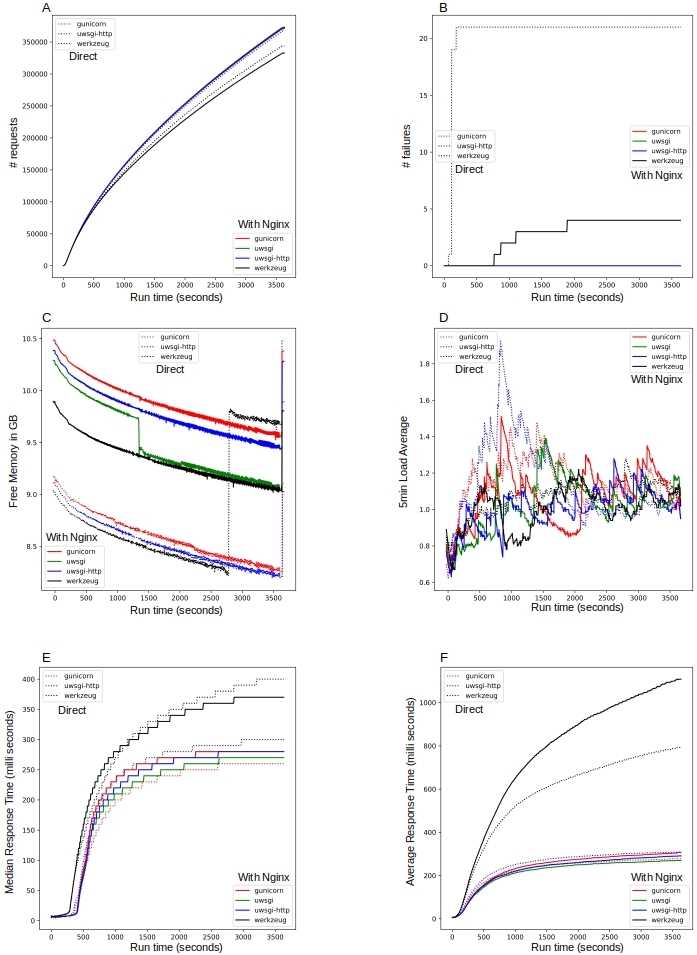

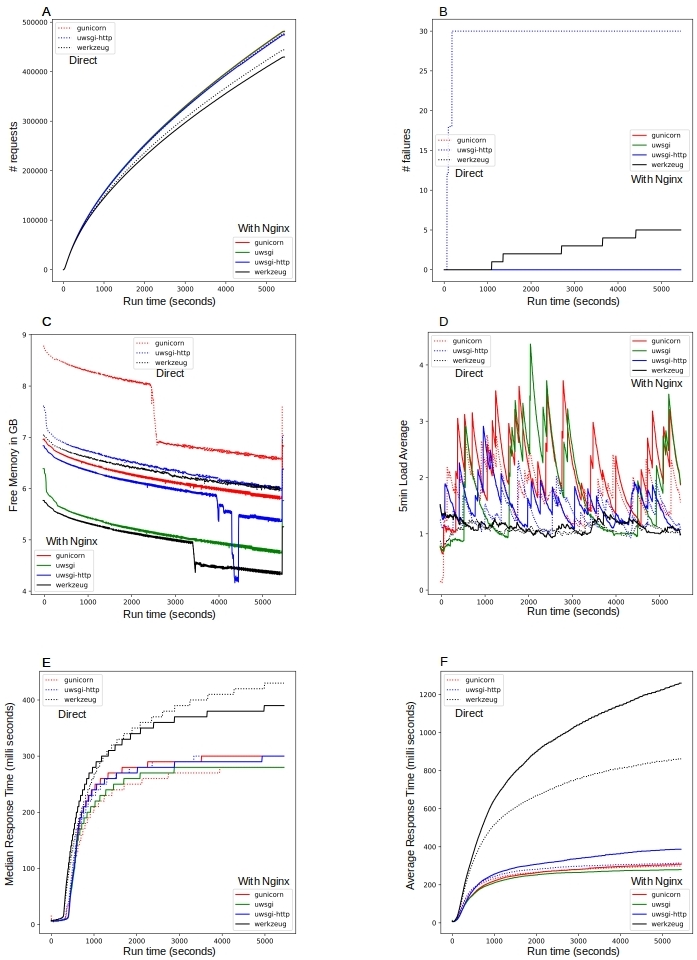

We have two series of runs – (a) with 6 workers, and (b) with 60 workers for WSGI. Each series has 7 Locust runs, as shown in the code snippet above. Locust generates data for a variety of metrics – the number of requests, failures, response times, etc… as a function of time. Likewise, cmonitor collects data on the load, memory usage etc… of the hardware. Figure 5 below shows the results with 6 workers.

The main conclusions of interest from Figure 5 (E & F) are the following.

- Performance: We consider the average response time (Figure 5F). The performance of the uWSGI and gunicorn servers is comparable with/without Nginx. The default werkzeug server that Flask comes with is the worst, which is one of the reasons the Flask developers recommend NOT using it in production. Also, if you like uWSGI, go for the binary uwsgi protocol and put it behind Nginx, as it is the best. Here is the explicit order, from best to worst.

  1. uWSGI server (uwsgi) behind Nginx

  2. gunicorn server without Nginx

  3. uWSGI server (Http) behind Nginx

  4. gunicorn server behind Nginx

  5. uWSGI server (Http) without Nginx

  6. werkzeug, with/without Nginx

- Why is the response time increasing? It is NOT because the server performance is degrading with time. Rather, it is the Starbucks phenomenon of early morning coffee, at work! What Locust reports here is the total elapsed time between when the request is fired and when the response is received. The rate at which we fire requests is higher than the rate at which the server clears them, so the requests get queued and the line gets longer and longer with time. Requests that join the line early wait less than those that join later, which, of course, shows up as a longer response time for later requests.

- Why does the median increase in steps while the average increases smoothly? The median (or any percentile) is just one integer number (milliseconds), whereas the average is, of course, the average (a float) of all the numbers. The percentile is based on the counts on either side of its current value and, given the randomness, increases slowly and by quantum jumps. The average, on the other hand, increases continuously.
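The queueing argument can be checked with a small simulation. This is a deliberately simplified sketch, assuming a single server, evenly spaced arrivals and a fixed service time; the function name `simulate_queue` and the rates used are hypothetical and are not measurements from these runs.

```python
def simulate_queue(n_requests, arrival_rate, service_rate):
    """Single-server FIFO queue with evenly spaced arrivals and fixed service time.

    Rates are in requests/second. Returns the response time in seconds
    (waiting in line plus being served) of each request, in arrival order.
    """
    service_time = 1.0 / service_rate
    server_free_at = 0.0
    response_times = []
    for i in range(n_requests):
        arrived = i / arrival_rate
        started = max(arrived, server_free_at)  # wait if the server is still busy
        server_free_at = started + service_time
        response_times.append(server_free_at - arrived)
    return response_times


if __name__ == "__main__":
    overloaded = simulate_queue(200, arrival_rate=100, service_rate=80)
    keeping_up = simulate_queue(200, arrival_rate=80, service_rate=100)
    print("overloaded: first %.4f s, last %.4f s" % (overloaded[0], overloaded[-1]))
    print("keeping up: first %.4f s, last %.4f s" % (keeping_up[0], keeping_up[-1]))
```

When requests arrive faster than they are served, each request waits a little longer than the one before it, so the response time climbs steadily even though the time to serve a single request never changes. When the server keeps up, the response time stays flat at the service time.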

But there is more we can learn from figures A-D.

- (A) The total number of requests increases with time – of course! Kind of linear but not quite, and there is some variation between the runs too. All that is simply because the wait_time between successive requests from the simulated users has been randomized in the code snippet above.

- (B) There are some failures but very, very few compared to the total number of requests served. Perhaps not enough to draw big conclusions.

- (C & D) There is plenty of free memory in all cases and not much load. But it does seem that when Nginx is not used, larger memory is being consumed with the server experiencing a slightly higher load.

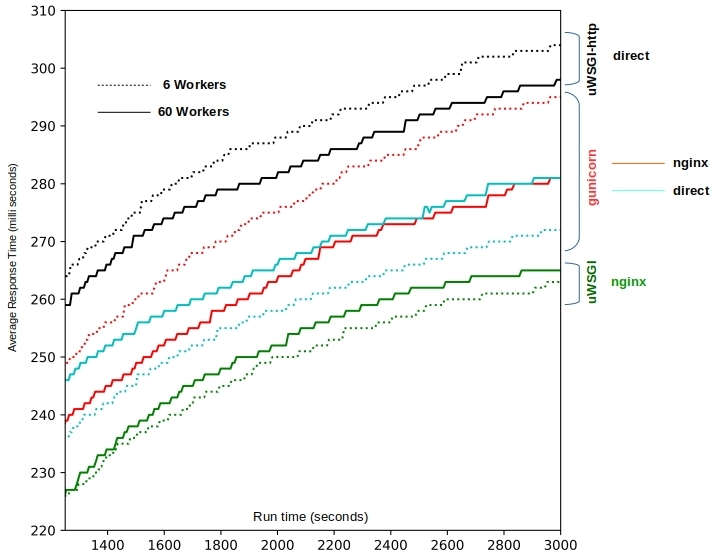

We are clearly not taxing the server with 6 workers. Let us bump up the workers to 60 and see what we get. This is in Figure 6 below.

We have clearly increased the load and the memory usage (C & D), but all of our conclusions from Figure 5 still hold, with the uWSGI server as the leader, followed by gunicorn. Before closing this post, let us look at the response time results with 6 and 60 workers on the same plot, focusing only on uWSGI and gunicorn.

6. Conclusions

We have learned in this post that:

- the uWSGI server behind Nginx is the best performer

- we cannot go wrong with gunicorn either with/without Nginx

- we want to avoid placing the uWSGI HTTP server behind Nginx, as the uWSGI website itself advises against it

- we should not use the default werkzeug server in production!

Happy learning!