Have Unbalanced Classes? Try Significant Terms

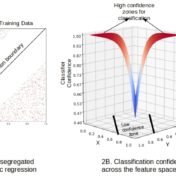

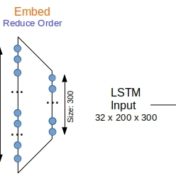

The words that are significant to a class can be used to improve the precision-recall trade-off in classification. Using the top significant terms as the vocabulary to drive a classifier yields improved results with a much smaller model for predicting MIMIC-III CCU readmissions from discharge notes.
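To make the idea concrete, here is a minimal sketch of picking a class-specific vocabulary and using it to vectorize a document. It is an illustration under stated assumptions, not the pipeline behind the results above: the scoring rule (how much more often a term appears in the target class than in the whole corpus, in the style of the JLH heuristic), the whitespace tokenizer, the function names `significant_terms` and `vectorize`, and the four toy notes are all made up for this example.

```python
from collections import Counter


def significant_terms(docs, labels, target, top_k=10):
    """Rank terms by how over-represented they are in the `target` class.

    Assumption: score = (fg_rate - bg_rate) * (fg_rate / bg_rate), where the
    rates are document frequencies in the target class (foreground) and in
    the whole corpus (background). Other scoring rules are possible.
    """
    # Naive whitespace tokenization; each document contributes a term once.
    all_docs = [set(d.lower().split()) for d in docs]
    fg_docs = [toks for toks, y in zip(all_docs, labels) if y == target]

    fg_df = Counter(t for toks in fg_docs for t in toks)
    bg_df = Counter(t for toks in all_docs for t in toks)

    scores = {}
    for term, fg_count in fg_df.items():
        fg_rate = fg_count / len(fg_docs)
        bg_rate = bg_df[term] / len(all_docs)
        # Keep only terms that are more common in the target class.
        if fg_rate > bg_rate:
            scores[term] = (fg_rate - bg_rate) * (fg_rate / bg_rate)

    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores, key=lambda t: (-scores[t], t))
    return ranked[:top_k]


def vectorize(doc, vocabulary):
    """Count vector restricted to the (small) significant-term vocabulary."""
    counts = Counter(doc.lower().split())
    return [counts[t] for t in vocabulary]


# Toy, hand-written notes (not MIMIC-III data); label 1 is the minority class.
docs = [
    "patient stable discharged home",
    "patient stable follow up clinic",
    "patient sepsis ventilator discharged",
    "patient stable discharged home",
]
labels = [0, 0, 1, 0]

vocab = significant_terms(docs, labels, target=1, top_k=2)
features = vectorize("patient sepsis sepsis home", vocab)
print(vocab, features)
```

The feature vector has one entry per significant term rather than one per word in the corpus, which is where the reduction in model size comes from. Common words such as "patient" drop out because they are no more frequent in the minority class than anywhere else.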