Semantics at Scale: BERT + Elasticsearch

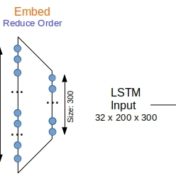

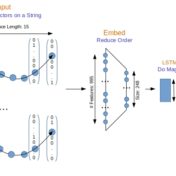

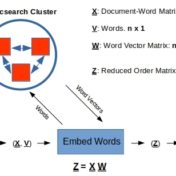

Semantic search at scale has become practical thanks to tools like BERT and bert-as-service and, of course, support for dense vector operations in Elasticsearch. How much it helps depends on the use case, but search results can certainly benefit from augmenting the keyword-based results with the semantic ones…
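To make the idea of augmenting keyword results with semantic ones concrete, here is a minimal sketch of an Elasticsearch `script_score` request body that adds a cosine-similarity term to the keyword score. The helper name `build_hybrid_query`, the field names `body` and `body_vector`, and the `weight` parameter are assumptions for illustration, not something taken from a real index. The exact Painless syntax for `cosineSimilarity` has changed between Elasticsearch versions, so check it against the version you run. In practice the query vector would come from a BERT encoder (for example via bert-as-service) rather than the toy three-element list used here.

```python
from typing import Any, Dict, List


def build_hybrid_query(
    text: str,
    query_vector: List[float],
    text_field: str = "body",            # hypothetical text field
    vector_field: str = "body_vector",   # hypothetical dense_vector field
    semantic_weight: float = 1.0,        # hypothetical blending weight
) -> Dict[str, Any]:
    """Build a script_score request body blending keyword and vector scores."""
    return {
        "query": {
            "script_score": {
                # Keyword stage: only documents matching the text get rescored.
                "query": {"match": {text_field: text}},
                "script": {
                    # Cosine similarity lies in [-1, 1]; adding 1.0 keeps the
                    # final score non-negative, which Elasticsearch requires.
                    "source": (
                        "_score + params.weight * "
                        f"(cosineSimilarity(params.query_vector, '{vector_field}') + 1.0)"
                    ),
                    "params": {
                        "query_vector": query_vector,
                        "weight": semantic_weight,
                    },
                },
            }
        }
    }


# Toy vector for illustration; a real BERT embedding is much longer.
body = build_hybrid_query("how do transformers work", [0.1, 0.2, 0.3])
```

The resulting dictionary can be passed as the request body of a search call. Raising or lowering `semantic_weight` is one simple way to tune how strongly the semantic signal influences the ranking for a given use case.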