Serving a flask application with gunicorn and nginx on docker…

Packaging applications for reproducible results across environments has gotten a great boost with docker. Docker allows us to bundle the application with all its dependencies so that the resulting image can be run anywhere with a compatible docker runtime. The applications we are interested in require resources such as:

- a web application framework (e.g. Flask)

- a WSGI server (e.g. gunicorn)

- a web server (e.g. Nginx)

- a datastore (e.g. Elasticsearch)

- an orchestration engine (e.g. Kubernetes)

To keep the length manageable, this post focuses on the first three items in the list above and on dockerizing them. We will use the work here as a springboard for a Kubernetes implementation in a subsequent post that covers the whole gamut. This post goes over the following.

- Build an app container to run a Flask application behind gunicorn

- Build a web container to serve static content while proxying requests back to the app container for dynamic data

- Enable the Flask application to talk to an Elasticsearch instance on the host. You can replace this with any other data store of your choice.

The driver for this post is the earlier article A Flask Full of Whiskey (WSGI) – that was implemented natively on my laptop. Pulling together the code, system configuration, and the commands to share with the post was a chore. Plus, some of that needed to be tweaked anyway by the end-user if they were on a different OS, or had a differently configured system. A docker image with the moving parts bundled would have been easier to share, and easier to reuse! Let us get with it then.

There are some code snippets in this post for illustration. The complete code can be obtained from github.

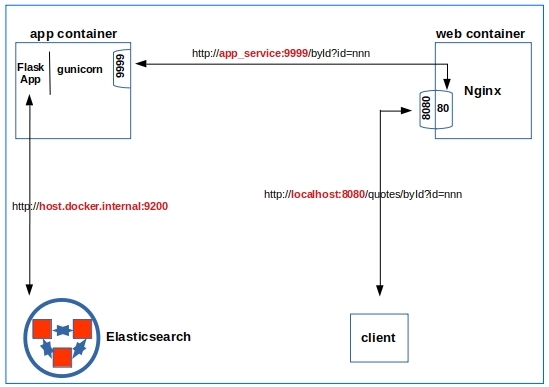

1. The scheme

Figure 1 below shows in a nutshell what we plan to implement.

We choose to leave Elasticsearch running on the host, as it serves other applications as well. Besides, we want to model a situation where the containerized applications need to access applications on the host. The application is simple. From the id value in the request, it queries the Elasticsearch index for a quote with that id and builds a snippet of HTML using a template.

```python
from elasticsearch import Elasticsearch
from flask import Flask, request, render_template

app = Flask(__name__)
# 'host.docker.internal' will be resolved by the container to point to the host
client = Elasticsearch([{'host':'host.docker.internal','port':9200}])
index = 'quotes'

@app.route('/byId', methods=['GET'])
def getById():
    docId = request.args.get('id')
    quote = client.get(index=index, id=docId)
    return render_template('quote.html',quote=quote)
```

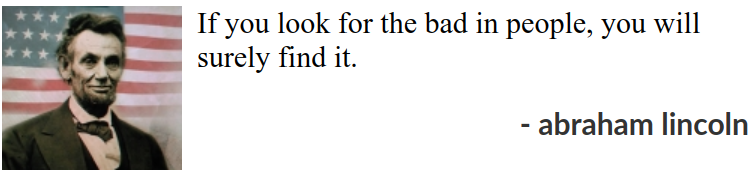

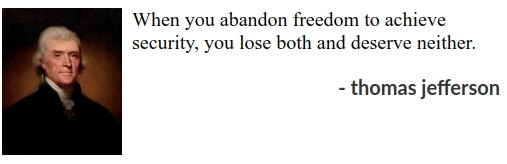

So upon hitting a URL like http://localhost:8080/quotes/byId?id=797944 you would get:

2. Docker compose

From Figure 1 we know that we need to set up two services, one of which should be accessible from the host. Here is the directory/file layout, and some detail on what each file is for.

```
.
├── app
│   ├── Dockerfile            # instructions to build the app_container image
│   ├── quoteserver
│   │   ├── config.py         # some config options for gunicorn
│   │   ├── quotes.py         # the 'app'
│   │   ├── requirements.txt
│   │   └── templates
│   │       └── quote.html
│   └── start.sh              # script to be run by the container
├── docker-compose.yml
└── web
    ├── Dockerfile
    ├── index.html
    ├── nginx.conf
    └── static
        ├── css
        │   └── quote.css
        ├── favicon.ico
        └── images
            └── abraham lincoln.jpg
```

The compose file is straightforward: two services, each built from the Dockerfile sitting in its own directory as seen above. The Nginx container is set to be accessible to the host at port 8080. Here is the full docker-compose.yml.

```yaml
version: '3.7'
services:
  app_service:
    build:
      context: ./app
      dockerfile: Dockerfile
  web_service:
    build:
      context: ./web
      dockerfile: Dockerfile
    ports:
      - 8080:80
```

3. Web container

For the web container, we start from the official Nginx image and add our config and static files in the Dockerfile below.

```dockerfile
FROM nginx

COPY ./nginx.conf /etc/nginx/
COPY index.html /var/www/html/
COPY ./static/ /var/www/html/
```

We need to send the requests to the Flask app running under gunicorn listening on port 9999 of the app container. That service is named ‘app_service’ in the docker-compose. Here is the relevant snippet from nginx.conf.

```nginx
#'/quotes/byId?id=nnn' => 'http://app_service:9999/byId?id=nnn'
location /quotes/ {
    proxy_pass http://app_service:9999/;
}
```
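The mapping in the comment above follows from how nginx treats a `proxy_pass` that carries a URI part (here the trailing `/`): the portion of the request path matched by the `location` is replaced by that URI, and the query string passes through. The Python sketch below only models that rule for illustration; the function name `proxied_url` is hypothetical and not part of nginx or of this project.

```python
from urllib.parse import urlsplit


def proxied_url(request_uri: str, location: str, proxy_pass: str) -> str:
    """Model the URI nginx forwards when proxy_pass includes a URI part."""
    parts = urlsplit(request_uri)
    if not parts.path.startswith(location):
        raise ValueError(f"{parts.path!r} does not match location {location!r}")
    # The prefix matched by the location is swapped for the proxy_pass URI.
    remainder = parts.path[len(location):]
    url = proxy_pass + remainder
    # The query string is forwarded unchanged.
    if parts.query:
        url += "?" + parts.query
    return url


print(proxied_url("/quotes/byId?id=797944", "/quotes/", "http://app_service:9999/"))
# http://app_service:9999/byId?id=797944
```

Without the trailing slash in `proxy_pass`, nginx would forward the original path, `/quotes/byId`, which the Flask route `/byId` would not match.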

4. App container

The image for the application container starts with the lean python-alpine image and installs the required modules from requirements.txt.

```dockerfile
# Pull a lean base image
FROM python:3.8-alpine
# We need the "ip" command used in 'start.sh'
RUN apk add --update iproute2
COPY start.sh /
RUN chmod +x /start.sh
COPY ./quoteserver /quoteserver
# requirements.txt lists the modules & their versions to be installed
RUN pip install --upgrade pip
RUN pip install -r ./quoteserver/requirements.txt
# Update /etc/hosts and start gunicorn
CMD ["/start.sh"]
```

The Flask app needs to be able to access Elasticsearch running on the host, but we do not want to hardcode a potentially variable IP address of the host. On Mac and Windows, docker resolves ‘host.docker.internal‘ to the host IP address automatically, but unfortunately not on Linux. I found this post https://dev.to/bufferings/access-host-from-a-docker-container-4099 to get around the issue: look up the container's default gateway and add it to /etc/hosts under that name. This fix is combined with the command to start gunicorn, and that is our start.sh below.

```sh
#!/bin/sh
# Resolve 'host.docker.internal' so we can access the host
HOST_DOMAIN="host.docker.internal"
HOST_IP=$(ip route | awk 'NR==1 {print $3}')
echo -e "$HOST_IP\t$HOST_DOMAIN" >> /etc/hosts
# Start gunicorn
/usr/local/bin/gunicorn -c /quoteserver/config.py -b 0.0.0.0:9999 --chdir /quoteserver quotes:app
```
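To make the `awk 'NR==1 {print $3}'` step concrete, here is a small Python sketch of the same extraction. The sample `ip route` output is an assumption about what a container on the compose network would typically print (default route first), and the helper names `host_gateway` and `hosts_entry` are hypothetical.

```python
def host_gateway(ip_route_output: str) -> str:
    """Return the third whitespace-separated field of the first line."""
    first_line = ip_route_output.splitlines()[0]
    return first_line.split()[2]


def hosts_entry(ip: str, name: str = "host.docker.internal") -> str:
    """Format a line for /etc/hosts, as the echo in start.sh does."""
    return f"{ip}\t{name}"


# Assumed sample output of `ip route` inside the app container.
SAMPLE = (
    "default via 172.24.11.1 dev eth0\n"
    "172.24.11.0/24 dev eth0 scope link  src 172.24.11.3\n"
)

print(hosts_entry(host_gateway(SAMPLE)))
```

The approach relies on the default route being the first line of the output; if the routing table were ordered differently, the third field of the first line would not be the gateway.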

5. Docker network and firewall rules

One last thing I had to do on my laptop was to allow Elasticsearch to be accessed from the docker network. The default address range in docker is quite large: in the 172 set, for example, it spans 172.17.0.0/16 through 172.31.0.0/16. Every time you run ‘docker-compose up‘, the containers can get addresses from anywhere in that range, so the whole range would have to be opened up. That is too large a range for my liking to open up for access to Elasticsearch on my home network. We change this by supplying the /etc/docker/daemon.json file below with a custom, smaller range.

```json
{
  "default-address-pools":
  [
    {"base":"172.24.0.0/16","size":24}
  ]
}
```

We restart the docker service and open up 172.24.0.0/16 alone for access to Elasticsearch.

```sh
sudo systemctl restart docker.service
sudo ufw allow from 172.24.0.0/16 proto tcp to any port 9200
```
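The arithmetic behind this choice can be checked with Python's standard `ipaddress` module. The sketch below takes the 172.17 to 172.31 range quoted above at face value and compares it with the single /16 pool from daemon.json, which docker carves into /24 networks.

```python
import ipaddress

# The 172.x range mentioned above: fifteen /16 blocks.
default_172 = [ipaddress.ip_network(f"172.{n}.0.0/16") for n in range(17, 32)]
default_size = sum(net.num_addresses for net in default_172)

# The custom pool: one /16 base, handed out as /24 networks ("size": 24).
pool = ipaddress.ip_network("172.24.0.0/16")
networks = list(pool.subnets(new_prefix=24))

print(default_size)               # 983040 addresses in the default 172 set
print(pool.num_addresses)         # 65536 addresses in the custom pool
print(len(networks))              # 256 possible /24 networks
print(networks[0], networks[-1])  # 172.24.0.0/24 172.24.255.0/24
```

So the firewall rule covers one fifteenth of the address space it would otherwise need to, while still leaving room for 256 compose networks.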

6. Test and verify

We bring up the containers with docker-compose.

```
[quoteserver] ls
app/  docker-compose.yml  web/
[quoteserver] docker-compose build
[quoteserver] docker-compose up -d
[quoteserver] docker network ls
NETWORK ID          NAME                  DRIVER              SCOPE
f60dfeab8f95        bridge                bridge              local
...
90cf5e594e9d        quoteserver_default   bridge              local
```

Inspecting the quoteserver_default network confirms that our daemon.json is in effect.

```
[quoteserver] docker inspect 90cf5e594e9d
...
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.24.11.0/24",
                    "Gateway": "172.24.11.1"
                }
            ]
        },
...
```
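If you would rather check this programmatically than by eye, a sketch along the following lines could work. It assumes the IPAM section has already been extracted into a JSON object shaped like the fragment above (the real `docker inspect` output is a JSON array with many more fields), and the function name `ipam_within_pool` is hypothetical.

```python
import ipaddress
import json

POOL = ipaddress.ip_network("172.24.0.0/16")

# Simplified fragment mirroring the IPAM section shown above.
INSPECT_FRAGMENT = """
{"IPAM": {"Driver": "default", "Options": null,
          "Config": [{"Subnet": "172.24.11.0/24", "Gateway": "172.24.11.1"}]}}
"""


def ipam_within_pool(inspect_json: str, pool: ipaddress.IPv4Network) -> bool:
    """True if every subnet lies inside the pool and holds its gateway."""
    ipam = json.loads(inspect_json)["IPAM"]
    for cfg in ipam["Config"]:
        subnet = ipaddress.ip_network(cfg["Subnet"])
        gateway = ipaddress.ip_address(cfg["Gateway"])
        if not subnet.subnet_of(pool) or gateway not in subnet:
            return False
    return True


print(ipam_within_pool(INSPECT_FRAGMENT, POOL))  # True
```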

Listing the running containers shows that we have the web container exposing its port 80 at 8080 for the host.

```
[quoteserver] docker ps -a --format="table {{.Names}}\t{{.Image}}\t{{.Ports}}\t{{.Command}}"
NAMES               IMAGE                     PORTS                  COMMAND
app_container       quoteserver_app_service                          "/start.sh"
web_container       quoteserver_web_service   0.0.0.0:8080->80/tcp   "nginx -g 'daemon of…"
```

Examining the app container shows that the gateway address has been appended to /etc/hosts, so that ‘host.docker.internal‘ resolves to the host.

```
[quoteserver] docker exec -it 56fe0df26e36 /bin/sh -c "tail -3 /etc/hosts"
ff02::2 ip6-allrouters
172.24.11.3     app_host
172.24.11.1     host.docker.internal
```

All that is left to do now is test the functionality.

```sh
curl http://localhost:8080/quotes/byId?id=86789
```
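The same check can be scripted in Python with only the standard library, which may be handy for a repeatable smoke test. This is a minimal sketch: the helper names `quote_url` and `fetch_quote` are hypothetical, and the base URL assumes the port mapping from the compose file above.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def quote_url(doc_id: int, base: str = "http://localhost:8080") -> str:
    """Build the URL that nginx proxies to the Flask app."""
    return f"{base}/quotes/byId?{urlencode({'id': doc_id})}"


def fetch_quote(doc_id: int, base: str = "http://localhost:8080",
                timeout: float = 5.0) -> str:
    """Fetch the rendered HTML snippet for a quote id."""
    with urlopen(quote_url(doc_id, base), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# With the containers up, fetch_quote(86789) should return the same
# HTML that the curl command above prints.
```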

With that, we conclude this rather short post. We will take up the full-fledged container orchestration with Kubernetes in a subsequent post.