In the previous post, Word Embeddings and Document Vectors: Part 1. Similarity, we laid the groundwork for using bag-of-words based document vectors in conjunction with word embeddings (pre-trained or custom-trained) for computing document similarity, as a precursor to classification. It seemed that document+word vectors were better at picking up on similarities (or the lack thereof) in the toy documents we looked at. We carry through with it here and apply the approach against actual document repositories with known class labels – one binary and the other multiclass. The objective of course is to see how the document+word vectors do for classification, from both quality and performance points of view. After all, the whole push towards word embeddings was initiated by the need to project the high-dimensional bag-of-words document vectors to a lower-dimensional manifold where neural networks can be competitive.

Outline

The outline for the article is as follows. The code for the full implementation can be downloaded from github.

- Pick two document repositories,

- The 20-newsgroups (20-news) corpus, available through SciKit. This is for multiclass classification. The corpus consists of posts made to 20 news groups, so it is well-labeled.

- The large movie review data set from Stanford. This is for binary sentiment classification – i.e. is the movie good or bad based on the reviews.

- To make sure that we use exactly the same document tokens and word-vectors in all the classification tests, we use Elasticsearch to hold and serve the corpus tokens (stopped/stemmed), and the word-vectors (pre-trained and custom-trained). This is done just once. We will consider the Word2Vec/SGNS and FastText algorithms for the word-vectors. The Gensim API is used for generating the custom vectors and for processing the pre-trained ones.

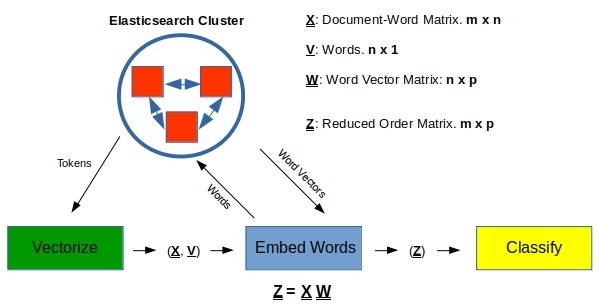

- Build a SciKit pipeline that executes the following sequence of operations shown in Figure 1.

- Get document tokens (stopped or stemmed) from an Elasticsearch index. Vectorize them (with CountVectorizer or TfidfVectorizer from SciKit) to get the high-order document-word matrix.

- Embed word-vectors (Word2Vec, FastText, pre-trained or custom) fetched from an Elasticsearch index for each token. This results in a reduced order document-word matrix.

- Run the SciKit-supplied classifiers (Multinomial Naive Bayes, Linear Support Vector Classifier, and Neural Nets) for training and prediction. All classifiers employ default values except for the required number of neurons and hidden layers in the case of neural nets.

The code snippets shown here are what they are – snippets, snipped from the full implementation, and edited for brevity to focus on a few things. The github repo is the reference. We will briefly detail the tokenization and word-vector generation steps above before getting to the full process pipeline.

1. Tokenization

While the document vectorizers in SciKit can tokenize the raw text in a document, we would like to control it with custom stop words, stemming and such. Plus we want to do this only once, and simply reuse the result in each classification run – i.e. there is no need for this to be a part of the pipeline. Here is a snippet of code that tokenizes the 20-news corpus and saves it to an Elasticsearch index for future retrieval.

    from nltk.tokenize import RegexpTokenizer
    from nltk.corpus import stopwords
    from nltk.stem.snowball import SnowballStemmer
    import string
    from elasticsearch import Elasticsearch
    from elasticsearch.helpers import bulk, streaming_bulk
    from sklearn.datasets import fetch_20newsgroups

    def tokenize (text):
        # keep tokens that start with a letter and are 3 to 15 characters long
        tokens = [word.strip(string.punctuation) for word in RegexpTokenizer(r'\b[a-zA-Z][a-zA-Z0-9]{2,14}\b').tokenize(text)]
        return tokens

    def removeStopWords (tokens):
        # lowercase and drop NLTK English stopwords
        filteredTokens = [f.lower() for f in tokens if f and f.lower() not in nltk_stopw]
        return filteredTokens

    def stem (filteredTokens): # stem tokens longer than 1 character
        return [stemmer.stem(token) for token in filteredTokens if len(token) > 1]

    def getArticle():
        # yield one Elasticsearch bulk action per post
        for i, article in enumerate(twenty_news['data']):
            stopped = removeStopWords (tokenize (article))
            stemmed = stem (stopped)
            fileName = twenty_news['filenames'][i]
            groupIndex = twenty_news['target'][i]
            groupName = twenty_news['target_names'][groupIndex]
            yield {
                "_index": "twenty-news",
                "_type": "article",
                "original": article, "stopped" : stopped, "stemmed" : stemmed, "groupIndex": str(groupIndex), "groupName" : groupName, "fileName" : fileName
            }

    es = Elasticsearch([{'host':'localhost','port':9200}])
    nltk_stopw = stopwords.words('english')
    stemmer = SnowballStemmer("english")
    twenty_news = fetch_20newsgroups(subset='all', remove=('headers', 'footers', 'quotes'), shuffle=True, random_state=42)
    bulk(es, getArticle())

In `tokenize`, the regular expression drops punctuation and keeps only tokens that start with a letter and are 3 to 15 characters long. Tokens are lowercased and stopwords removed in `removeStopWords`, and stemmed in `stem`. In the `fetch_20newsgroups` call we remove the headers, footers, and quoted text from each post, as those would be a dead giveaway as to which news group the article belongs to. Basically we are making it harder to classify.
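To see what the token pattern actually keeps, here is a minimal sketch that applies the same regular expression with the standard `re` module instead of NLTK. The tiny `STOP_WORDS` set and the sample sentence are made up for illustration; the real run uses the NLTK English stopword list.

```python
import re

# Same pattern as in the tokenizer above: a letter followed by 2 to 14
# letters or digits, i.e. tokens of 3 to 15 characters.
TOKEN_RE = re.compile(r'\b[a-zA-Z][a-zA-Z0-9]{2,14}\b')

# Hypothetical, tiny stopword list used only for this illustration.
STOP_WORDS = {"the", "and", "was", "for"}

def tokenize(text):
    """Return every substring of text that matches the token pattern."""
    return TOKEN_RE.findall(text)

def remove_stop_words(tokens):
    """Lowercase the tokens and drop the ones in STOP_WORDS."""
    return [t.lower() for t in tokens if t.lower() not in STOP_WORDS]

text = "The GPU was overclocked to 4GHz, and it ran for 3 hrs!"
tokens = tokenize(text)
stopped = remove_stop_words(tokens)
print(tokens)
print(stopped)
```

Short words such as "to" and "it", and anything starting with a digit such as "4GHz", never make it into the token list.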

2. Word-Vectors

Our tests use both pre-trained and custom word-vectors that have been saved into an elasticsearch index for easy retrieval. The following code snippet processes the published fasttext word-vectors into an elasticsearch index.

    import os

    from elasticsearch import Elasticsearch
    from elasticsearch.helpers import bulk, streaming_bulk
    from gensim.models import KeyedVectors

    es = Elasticsearch([{'host':'localhost','port':9200}])

    def getWord():
        # yield one Elasticsearch bulk action per word in the embedding model
        filename = os.path.basename(filepath)
        for word in embedModel.index2word:
            wv = embedModel.get_vector(word)
            yield {
                "_index": "word-embeddings",
                "_type": "words",
                "_id" : filename + '_' + word,
                "word": word,
                "vector": wv.tolist(),
                "file": filename
            }

    filepath = './crawl-300d-2M-subword.vec'
    isBinary = False
    embedModel = KeyedVectors.load_word2vec_format(filepath, binary=isBinary)
    bulk(client=es, actions=getWord(),chunk_size=100,request_timeout=120)

The `KeyedVectors.load_word2vec_format` call reads the pre-trained vectors, and the `bulk` call indexes them into Elasticsearch. We can also generate custom word-vectors from any text corpus at hand. Gensim provides a handy API for that as well.

    from gensim.models import Word2Vec
    from gensim.models import FastText
    import numpy as np
    from elasticsearch import Elasticsearch
    from elasticsearch.helpers import bulk, streaming_bulk, scan

    es = Elasticsearch([{'host':'localhost','port':9200}])

    def getWord():
        for word in embedModel.wv.index2word:
            vector = embedModel.wv.get_vector(word)
            yield {
                "_index": "twenty-vectors",
                "_type": "words",
                "_id" : filename + '_' + word + '_' + tokenType,
                "tokenType": tokenType,
                "word": word,
                "vector": vector.tolist(),
                "file": filename
            }

    def getTokens():
        listOfArticles = []
        query = { "query": { "match_all" : {} }, "_source" : [tokenType]}
        hits = scan (es, query=query, index="twenty-news", doc_type="article")
        for hit in hits:
            listOfArticles.append(hit['_source'][tokenType])
        return listOfArticles

    min_count = 2

    # word2vec / SGNS
    filename = 'twenty_news_word2vec_sgns_model'
    for tokenType in ['stemmed', 'stopped']:
        embedModel = Word2Vec(getTokens(), size=300, sg=1, min_count=min_count, window=5, negative=5)
        bulk(client=es, actions=getWord(),chunk_size=50,request_timeout=120)

    # fastText
    filename = 'twenty_news_fasttext_model'
    for tokenType in ['stemmed', 'stopped']:
        embedModel = FastText(getTokens(), size=300, sg=1, min_count=min_count, window=5, negative=5)
        bulk(client=es, actions=getWord(),chunk_size=50,request_timeout=120)

The `Word2Vec` and `FastText` models are trained with the tokens (stopped or stemmed) obtained from the corpus index we created in Section 1. The chosen length for the vectors is 300. The `min_count` parameter refers to the minimum number of times a token has to occur in the corpus for that token to be considered.
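The effect of `min_count` is easy to picture with a plain frequency count. The sketch below is not Gensim code; the helper name `vocabulary_after_min_count` and the toy sentences are assumptions made for illustration. It simply keeps the tokens whose total corpus frequency reaches the threshold.

```python
from collections import Counter

def vocabulary_after_min_count(sentences, min_count=2):
    """Return the tokens whose total corpus frequency is at least min_count."""
    freq = Counter(token for sentence in sentences for token in sentence)
    return {token for token, n in freq.items() if n >= min_count}

# Toy corpus: each inner list is one tokenized document.
sentences = [
    ["engine", "oil", "leak"],
    ["engine", "stall"],
    ["oil", "change", "engine"],
]
kept = vocabulary_after_min_count(sentences, min_count=2)
print(sorted(kept))
```

Tokens that occur only once ("leak", "stall", "change") would get no vector of their own with this setting.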

3. Process Pipeline

We vectorize the documents in the repo, transform and reduce the order of the model if word embeddings are to be employed, and apply a classifier for fitting and prediction as shown in Figure 1 earlier. Let us look at each one of them in turn.

3.1 Vectorize

We said earlier that we could use SciKit’s count/tf-idf vectorizers. They yield a document-term matrix X for sure, but our word-embedding step in the pipeline needs the vocabulary/words obtained by that vectorizer. So we write a custom wrapper class around SciKit’s vectorizer and augment the transform response with the vocabulary.

    from sklearn.base import BaseEstimator, TransformerMixin
    from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

    class VectorizerWrapper (TransformerMixin, BaseEstimator):
        def __init__(self, model):
            self.model = model

        def fit (self, *args):
            self.model.fit (args[0], args[1])
            return self

        def transform (self, *args):
            # return the document-term matrix together with the vocabulary
            return {'sparseX': self.model.transform(args[0]), 'vocab': self.model.vocabulary_}

    # documents arrive already tokenized, so the analyzer is the identity
    vectorizers = [ ('counts', ("vectorizer", VectorizerWrapper(model=CountVectorizer(analyzer=lambda x: x, min_df=min_df)))), ('tfidf', ("vectorizer", VectorizerWrapper(model=TfidfVectorizer(analyzer=lambda x: x, min_df=min_df)))) ]

The wrapper is initialized with the actual SciKit vectorizer, with min_df (the minimum number of documents in the repository that a token must appear in to be included in the vocabulary) set to 2. The `fit` method uses the fit procedure of the chosen vectorizer, and the `transform` method issues a response with both X and the derived vocabulary V that the second step needs.
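To make the shape of that response concrete, here is a small pure-Python sketch of what a count vectorizer with `min_df=2` produces for pre-tokenized documents. It is only an illustration, not the SciKit implementation; the helper names `build_vocab` and `count_rows` and the toy documents are made up, and each sparse row is represented as a plain `{column: count}` dict.

```python
from collections import Counter

def build_vocab(docs, min_df=2):
    """Map each token found in at least min_df documents to a column index."""
    doc_freq = Counter(token for doc in docs for token in set(doc))
    kept = sorted(t for t, df in doc_freq.items() if df >= min_df)
    return {token: col for col, token in enumerate(kept)}

def count_rows(docs, vocab):
    """Return one {column: count} dict per document (a sparse row)."""
    return [dict(Counter(vocab[t] for t in doc if t in vocab)) for doc in docs]

docs = [
    ["film", "great", "plot"],
    ["film", "bad", "plot", "plot"],
    ["great", "cast"],
]
vocab = build_vocab(docs, min_df=2)
response = {"sparseX": count_rows(docs, vocab), "vocab": vocab}
print(response)
```

The words "bad" and "cast" appear in only one document each, so they get no column, and the vocabulary tells the next step which word each remaining column stands for.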

3.2 Embed Words

We have m documents and n unique words among them. The core part of the work here is the following.

- Obtain the p-dimensional word-vector for each of these n words from the index we prepared in Section 2.

- Prepare an nxp word-vector matrix W where each row corresponds to a word in the sorted vocabulary

- Convert the mxn original sparse document-word matrix X to an mxp dense matrix Z by simple multiplication. We have gone over this in the previous post. But note that SciKit works with documents as row-vectors, so the W here is the transpose of the same in Equation 1 in that post. Nothing complicated.

$$Z_{m \times p} = X_{m \times n} \, W_{n \times p}$$

p is of course the length of the word-vector, the projection of the original 1-hot n-dimensional vector to this fake p-word-space. We should be careful with matrix multiplication however as X comes in from the vectorizer as a Compressed Sparse Row matrix, and our W is a normal matrix. A bit of index jugglery will do it. Here is a code snippet around this step in the pipeline.

    import numpy as np
    from sklearn.base import BaseEstimator, TransformerMixin

    class Transform2WordVectors (BaseEstimator, TransformerMixin):
        wvObject = None

        def __init__(self, wvObject = None):
            Transform2WordVectors.wvObject = wvObject

        def fit (self, *args):
            return self

        def transform(self, *args):
            sparseX = args[0]['sparseX']
            if (not Transform2WordVectors.wvObject): # No transformation
                return sparseX
            else:
                vocab = args[0]['vocab']
                sortedWords = sorted(vocab, key=vocab.get) # sorting so they would be in the same order as the Doc-Term matrix
                wordVectors = Transform2WordVectors.wvObject.getWordVectors (sortedWords) # nWords_in_this_set x wordVectorLength
                reducedMatrix = self.sparseMultiply (sparseX, wordVectors)
            return reducedMatrix

        def sparseMultiply (self,sparseX, wordVectors):
            wvLength = len(wordVectors[0])
            reducedMatrix = []
            for row in sparseX:
                newRow = np.zeros(wvLength)
                # add up the word vectors of the words present in this document
                for nonzeroLocation, value in list(zip(row.indices, row.data)):
                    newRow = newRow + value * wordVectors[nonzeroLocation]
                reducedMatrix.append(newRow)
            reducedMatrix=np.array([np.array(xi) for xi in reducedMatrix])
            return reducedMatrix

    transformer = ('transformer', Transform2WordVectors(wvObject = wvObject))

The transformer is initialized with a word-vector object (check github for the code) that has the methods to get the vectors from the index. In `transform`, `sortedWords` holds the vocabulary passed from the vectorizer step, sorted by column index. The csr X matrix uses the same order for its columns, so we need the rows of W in the same order of words as well, which is what the `getWordVectors` call returns. Finally, the sparse matrix multiplication in `sparseMultiply` yields the reduced order matrix Z that we are after.
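The index jugglery is easier to follow on a tiny example. The sketch below builds the three arrays of a Compressed Sparse Row matrix by hand with NumPy (no SciPy needed) and forms Z = XW row by row. All the numbers and the helper name `csr_times_dense` are made up for illustration.

```python
import numpy as np

# CSR pieces for a 2 x 4 document-word matrix X (m = 2 documents, n = 4 words).
# Document 0: word 0 occurs twice, word 2 once. Document 1: word 3 occurs 3 times.
data = np.array([2.0, 1.0, 3.0])   # the non-zero values
indices = np.array([0, 2, 3])      # column (word) index of each value
indptr = np.array([0, 2, 3])       # row i owns data[indptr[i]:indptr[i + 1]]

# W: 4 x 3 word-vector matrix (p = 3), one row per word in vocabulary order.
W = np.array([
    [0.1, 0.2, 0.3],
    [0.0, 0.0, 0.0],
    [1.0, -1.0, 0.5],
    [0.5, 0.5, 0.5],
])

def csr_times_dense(data, indices, indptr, W):
    """Multiply a CSR matrix, given by its three arrays, with a dense matrix."""
    m = len(indptr) - 1
    Z = np.zeros((m, W.shape[1]))
    for i in range(m):
        start, end = indptr[i], indptr[i + 1]
        # weighted sum of the word vectors of the words present in document i
        Z[i] = data[start:end] @ W[indices[start:end]]
    return Z

Z = csr_times_dense(data, indices, indptr, W)

# Cross-check against an ordinary dense multiplication.
X_dense = np.zeros((2, 4))
for i in range(2):
    for k in range(indptr[i], indptr[i + 1]):
        X_dense[i, indices[k]] = data[k]
print(np.allclose(Z, X_dense @ W))
```

If the X coming out of the vectorizer is a SciPy CSR matrix and W is a NumPy array, `X.dot(W)` should give the same dense Z without the explicit loop.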

3.3 Classify

This is easy. The classifier gets the m x p matrix Z where each row is a document. It also gets the m x 1 vector of labels when fitting the model. We will evaluate three classifiers – naive bayes, support vector machines, and neural nets. We run them without tweaking any of the default SciKit parameters. In the case of neural nets we try a few different numbers of hidden layers (1, 2 or 3) and neurons within (50, 100, or 200) as there are no good defaults for that.

    from sklearn.naive_bayes import MultinomialNB
    from sklearn.svm import LinearSVC
    from sklearn.neural_network import MLPClassifier

    def getNeuralNet (nHidden, neurons):
        # one tuple entry per hidden layer, all with the same number of neurons
        neuralNet = (neurons,)
        for i in range(1, nHidden):
            neuralNet = neuralNet + (neurons,)
        return neuralNet

    classifiers = { 'nb' : ("nb", MultinomialNB()), 'linearsvc' : ("linearsvc", LinearSVC()) }

    if (model == 'mlp'):
        mlpClassifiers = []
        for nHidden in [1, 2, 3]:
            for neurons in [50, 100, 200]:
                name = str(nHidden) + '-' + str(neurons)
                mlpClf = (name, MLPClassifier(hidden_layer_sizes=getNeuralNet(nHidden, neurons),verbose=False))
                mlpClassifiers.append(mlpClf)
        classifiers['mlp'] = mlpClassifiers

The method getNeuralNet generates the tuple we need for initializing the neural net with the hidden layers and neurons. We prepare a suite of classifiers that are applied against the various combinations of vectorizers and transformers.
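For reference, the tuple in question has one entry per hidden layer, each entry being the number of neurons in that layer. A compact way to build such a tuple, assuming every hidden layer has the same width, is tuple repetition. The name `hidden_layer_sizes_for` is made up for this sketch.

```python
def hidden_layer_sizes_for(n_hidden, neurons):
    """Return a tuple with n_hidden entries, each equal to neurons."""
    return (neurons,) * n_hidden

# The nine configurations tried in the simulations.
grid = {
    f"{n_hidden}-{neurons}": hidden_layer_sizes_for(n_hidden, neurons)
    for n_hidden in [1, 2, 3]
    for neurons in [50, 100, 200]
}
print(grid["2-100"])
```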

4. Simulations

To keep it simple we stick to a single training set and single test set. In case of 20-news we do a stratified split with 80% for training and 20% for test. The imdb movie review data set comes with defined train and test sets. The `acl-imdb` branch in the code snippet below uses a Tokens class that has methods to pull tokens from the index.

    from sklearn.model_selection import StratifiedShuffleSplit
    from sklearn.pipeline import Pipeline

    if (wordCorpus == 'twenty-news'):
        testDataFraction = 0.2
        sss = StratifiedShuffleSplit(n_splits=1, test_size=testDataFraction, random_state=0)
        sss.get_n_splits(X, y)
        for train_index, test_index in sss.split(X, y):
            X_train, X_test = X[train_index], X[test_index]
            y_train, y_test = y[train_index], y[test_index]
    elif (wordCorpus == 'acl-imdb'):
        X_train, y_train, classNames = Tokens(wordCorpus).getTokens(tokenType,'train')
        X_test, y_test, classNames = Tokens(wordCorpus).getTokens(tokenType,'test')

    # Pipeline expects a list of (name, estimator) steps
    model = Pipeline([vectorizer, transformer, classifier])
    model.fit(X_train, y_train)
    predicted = model.predict(X_test)

The model to run is an instance of the pipeline – a specific combination of vectorizer, transformer, and classifier. The last three lines define the model, fit it, and run the predictions.
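What "stratified" buys us is that every class shows up in the test set in roughly the same proportion as in the full corpus. The following pure-Python sketch illustrates the idea; it is not the SciKit implementation, and the function name `stratified_split` and the toy labels are assumptions for illustration.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split indices into train/test so each class keeps its share in the test set."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)

# Toy labels: 10 documents of class "a" and 5 of class "b".
labels = ["a"] * 10 + ["b"] * 5
train_idx, test_idx = stratified_split(labels, test_fraction=0.2)
test_labels = [labels[i] for i in test_idx]
print(test_labels.count("a"), test_labels.count("b"))
```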

The simulations are run via a shell script that loops over the different combinations of classifiers, tokenization schemes, and word-vectors.

    #!/bin/bash

    #wordCorpus="twenty-news"
    wordCorpus="acl-imdb"
    min_df=2

    for model in mlp linearsvc nb; do
        for tokenType in stopped stemmed; do
            for wordVecSource in glove fasttext google custom-vectors-fasttext custom-vectors-word2vec none; do
                echo "pipenv run python classify.py $min_df $tokenType $wordCorpus $model $wordVecSource"
                pipenv run python classify.py $min_df $tokenType $wordCorpus $model $wordVecSource
            done
        done
    done

Some combinations are not allowed however and they are skipped in the Python implementation. Those are:

- Naive bayes classifier does not allow for negative values in the document vectors. But when we use document+word vectors, Z will have some negatives. It should be possible to translate/scale all vectors uniformly to avoid negatives, but we do not bother as we have enough simulations to run anyway. So basically naive bayes classifier is used ONLY with pure document vectors here.

- The pre-trained word-vectors are only available for normal words, not stemmed ones. So we skip the runs with the combination of stemmed tokens and pre-trained vectors.
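The two exclusions above amount to a small filter over the loop variables of the shell script. Here is a sketch of such a filter. The function name `is_allowed` is made up, and treating `glove`, `fasttext`, and `google` as the pre-trained sources is an assumption read off the names in the script; the actual check lives in the Python implementation on github.

```python
# Assumption: these three names in the shell script denote pre-trained vectors.
PRETRAINED = {"glove", "fasttext", "google"}

def is_allowed(model, token_type, word_vec_source):
    """Return False for the combinations that the simulations skip."""
    # Naive bayes cannot take the negative values present in Z.
    if model == "nb" and word_vec_source != "none":
        return False
    # Pre-trained vectors exist for normal words, not for stemmed ones.
    if token_type == "stemmed" and word_vec_source in PRETRAINED:
        return False
    return True

models = ["mlp", "linearsvc", "nb"]
token_types = ["stopped", "stemmed"]
sources = ["glove", "fasttext", "google",
           "custom-vectors-fasttext", "custom-vectors-word2vec", "none"]

allowed = [(m, t, s) for m in models for t in token_types for s in sources
           if is_allowed(m, t, s)]
print(len(allowed), "of", len(models) * len(token_types) * len(sources))
```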

While there are 9 mlp classifier runs (1, 2, or 3 hidden layers with 50, 100, or 200 neurons each), all the results reported here are for 2 hidden layers with 100 neurons each. The other mlp classifier runs basically serve to verify that the quality of the classification was not very sensitive at this level of hidden layers and neurons.

5. Results

The only metrics we look at are the F-scores for the quality of classification and the cpu time for efficiency. In case of multiclass classification for the 20-news data set, the F-score is an average over all the 20 classes. The run time for a classifier+vectors is averaged across all the runs with that classifier and the vectors used.
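As a reminder of what averaging the F-score over classes means, here is a small sketch that computes the per-class F1 and takes their plain average. It assumes an unweighted (macro) average, which may or may not be the exact averaging used for the figures; the function name `macro_f1` and the toy labels are made up for illustration.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); taken as 0 when the class never occurs
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
score = macro_f1(y_true, y_pred)
print(round(score, 4))
```

Here the per-class scores work out to 0.5, 0.8, and 2/3, so the average is a little under 0.66.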

5.1 Multiclass Classification of 20-News Data Set

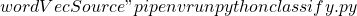

The results for the 20-news data set are summarized in Figure 2.

Figure 2. Multiclass classification of the 20-news data set. (2A) Classification with pure document vectors (2B) Classification with document+word vectors (2C) Run time with pure document vectors and document+word vectors

There is a lot of detail crammed into the figure here so let us summarize point by point.

- Document Vectors vs Document+Word Vectors: A glance at 2A and 2B would tell us that the classification quality in 2A is better, not by much perhaps, but nevertheless true and across the board. That is, if classification quality is paramount then document vectors seem to have an edge in this case.

- Stopped vs Stemmed: Stemmed vocabulary yields shorter vectors, so better for performance for all classifiers. This is especially true for the mlp classifier where the number of input neurons equals the size of the incoming document vectors. When the words are stemmed, the number of unique words dropped by about 30% from 39k to 28k, a big reduction in the size of the pure document vectors.

- Document Vectors. Figure 2A shows that when pure document vectors are the basis for classification, stemming had no material impact on the F-scores obtained.

- Document+Word Vectors. There does seem to be some benefit however to using stemmed tokens in this case. While the improvements are small, the custom vectors obtained by training on stemmed tokens show better F-scores than the vectors trained on stopped tokens. This is shown in Figure 2B.

- Frequency Counts Vs Tf-Idf: Tf-Idf vectorization allows for differential weighting for words based on how commonly they occur in the corpus. For keyword based search schemes it helps improve the relevance of search results.

- Document Vectors. While naive bayes was not impressed by tf-idf, both the linearsvc and mlp classifiers yield better F-scores with tf-idf vectorization. This is shown in Figure 2A.

- Document+Word Vectors. Figure 2B shows that there is a good improvement in F-scores when tf-idf vectorization is used, both with pre-trained word-vectors and custom word-vectors. The only exception seems to be when pre-trained word2vec vectors are used in conjunction with an mlp classifier. But on increasing the number of hidden layers to 3 (from 2) and neurons to 200 (from 100), tf-idf vectorization again yielded a better score.

- Pre-trained Vectors Vs Custom Vectors: This applies to Figure 2B alone. Custom word-vectors seem to have an edge.

- With word2vec, the custom vectors clearly yield better F-scores especially with tf-idf vectorization

- With fasttext, the pre-trained vectors seem to be marginally better

- Timing Results: Figure 2C shows the average cpu time for the fit & predict runs.

- The much larger run time for mlp classifier when pure document vectors are used is understandable. There are many (39k if stopped and 28k if stemmed) input neurons to work with. This is one of the reasons for the emergence of word embeddings as we discussed in the previous post.

- With a smaller but dense Z, the linearsvc classifier takes longer to converge.

- Naive bayes classifier is the fastest of the lot.
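On the Frequency Counts vs Tf-Idf point above, the differential weighting comes from the inverse document frequency. The sketch below uses the smoothed idf formula, ln((1 + n) / (1 + df)) + 1, which to my understanding is the documented default of SciKit's TfidfVectorizer (applied before each row is length-normalized). The function name `smoothed_idf` and the document counts are made up for illustration.

```python
import math

def smoothed_idf(n_docs, doc_freq):
    """Smoothed inverse document frequency: ln((1 + n) / (1 + df)) + 1."""
    return math.log((1 + n_docs) / (1 + doc_freq)) + 1.0

n_docs = 1000
idf_common = smoothed_idf(n_docs, 900)  # a word found in 900 of 1000 documents
idf_rare = smoothed_idf(n_docs, 5)      # a word found in only 5 documents
print(idf_common < idf_rare)
```

A word that shows up almost everywhere gets a weight close to 1, while a rare word is weighted several times higher, which is what lets the classifiers focus on the more discriminating words.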

5.2 Binary Classification of Movie Reviews

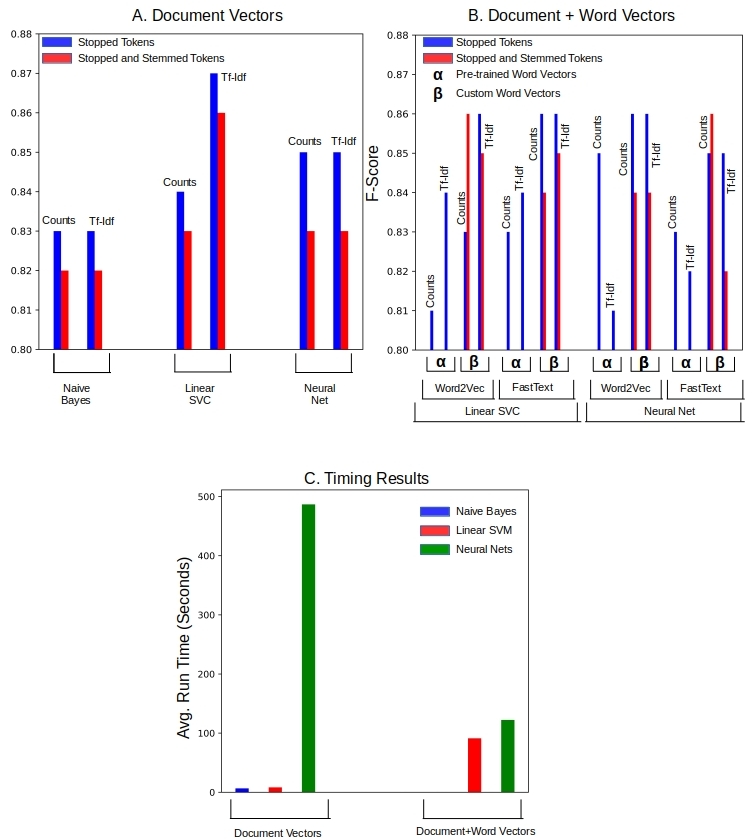

Figure 3 shows the binary classification results obtained for the movie review data set.

Figure 3. Binary classification of the movie review data set. (3A) Classification with pure document vectors (3B) Classification with document+word vectors (3C) Run time with pure document vectors and document+word vectors

The observations here are not qualitatively different from the above so we will not spend much time on them. The overall quality of classification here is better, with the F-scores being north of 0.8 (compared to around 0.6 for the 20-news corpus). But that is just the nature of this data set.

- Document Vectors vs Document+Word Vectors: Glancing at 3A and 3B we can say that document+word vectors seem to have an edge for classification quality overall (except for the one case when linearsvc is used with tf-idf). The opposite was the case for the 20-news data set.

- Stopped vs Stemmed: Stopped tokens seem to perform better in most cases. The opposite was true with the 20-news data set. Stemming results in a 34% reduction in the size of the vocabulary from 44k to 29k. We applied the same stemmer for both the data sets, but perhaps it was too aggressive for the nature of text in this corpus.

- Frequency Counts vs Tf-Idf: Tf-Idf vectors performed better in most cases, just as they did in the 20-news data set.

- Pre-trained Vectors Vs Custom Vectors: Custom word-vectors yield better F-scores than pre-trained vectors in all cases. This kind of confirms our assessment from the 20-news data set, where it was not so clear cut.

- Timing Results: Naive Bayes is still the fastest of the lot.

6. So What are the Big Take Aways?

We cannot unfortunately draw definite conclusions based on our somewhat shallow testing on just two data sets. Plus as we noted above, there was some variance in classification quality for the same pipeline across the data sets as well. But at a high level we can perhaps conclude the following – take them with a grain of salt!

- When in doubt – simplify. Do the following and you will not be too wrong and you will get your work done in a jiffy to boot. Use:

- Naive Bayes classifier

- Tf-idf document vectors

- Stem if you want to.

- Understand the corpus. The specific pipeline (tokenization scheme => vectorization scheme => word-embedding algorithm => classifier) that works well for one corpus may not be the best for a different corpus. Even if the general pipeline works, the details (specific stemmer, number of hidden layers, neurons etc.) will need to be tuned to get the same performance on a different corpus.

- Word embeddings are great. For dimensionality reduction and the concurrent reduction in the run times, for sure. They work pretty well, but there is more work to do. If you have to use neural nets for document classification you should try these.

- Use custom vectors. Using custom word-vectors generated from the corpus at hand is likely to yield better quality classification results.
