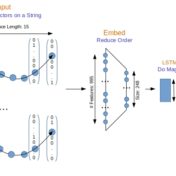

Sequence-respecting approaches have an edge over bag-of-words implementations when that sequence is material to classification. Long Short-Term Memory (LSTM) neural nets with word sequences are evaluated against Naive Bayes with tf-idf vectors on a synthetic text corpus for classification effectiveness.

Tf-idf vectors with word-embeddings are analyzed for clustering effectiveness. The text corpus examples considered here indicate that custom word-embeddings can help with clustering.

A sister task to classification in machine learning is clustering. While classification requires up-front labeling of training data with class information, clustering is unsupervised. There is a large benefit to unattended grouping of text on disk and we would like to know if word-embeddings can help. In fact, once identified, these… Read more »

Ok, that was in jest, my apologies! But it is a question we should ask ourselves before embarking on a clustering exercise. Clustering hinges on the notion of distance. The members of a cluster are expected to be closer to that cluster’s centroid than they are to the centroids of other clusters…. Read more »
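
As a minimal sketch of the distance idea above: each point is assigned to whichever centroid is nearest. The 2-D points, the two centroids, and the helper name `nearest_centroid` are made up for illustration, and Euclidean distance is an assumption; the post itself may use a different metric.

```python
import math

def nearest_centroid(point, centroids):
    """Return the index of the centroid closest to `point` (Euclidean distance)."""
    distances = [math.dist(point, c) for c in centroids]
    return distances.index(min(distances))

# Hypothetical 2-D vectors and two hypothetical cluster centroids.
centroids = [(0.0, 0.0), (5.0, 5.0)]
points = [(0.5, 1.0), (4.0, 6.0), (1.0, -0.5), (5.5, 4.5)]

# Each point gets the label of the centroid it is closest to.
labels = [nearest_centroid(p, centroids) for p in points]
print(labels)
```

A clustering is only meaningful if the chosen distance reflects the kind of similarity we care about, which is why the choice of vectors and metric comes first.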

In the previous post, Word Embeddings and Document Vectors: Part 1. Similarity, we laid the groundwork for using bag-of-words based document vectors in conjunction with word embeddings (pre-trained or custom-trained) for computing document similarity, as a precursor to classification. It seemed that document+word vectors were better at picking up on similarities… Read more »

Classification hinges on the notion of similarity. This similarity can be as simple as a categorical feature value such as the color or shape of the objects we are classifying, or a more complex function of all categorical and/or continuous feature values that these objects possess. Documents can be classified… Read more »

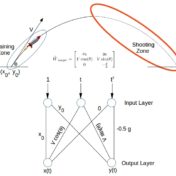

Closed-form solutions are sweet. No hand-wringing/waving required to make a point. Given the assumptions, the model predictions are exact so we can readily evaluate the impact of assumptions. And, we get the means to evaluate alternate (e.g. numerical) approaches applied to these same limiting cases with the exact solution. We are… Read more »

The curse of dimensionality is the bane of all classification problems. What is the curse of dimensionality? As the number of features (dimensions) increases linearly, the amount of training data required for classification increases exponentially. If the classification is determined by a single feature we need a priori classification data over… Read more »
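
One rough way to see the exponential growth, under the assumption that each feature's range is split into a fixed number of bins and we want at least one training example per cell. The function name `cells_to_cover` and the choice of 10 bins are illustrative, not taken from the post.

```python
def cells_to_cover(bins_per_feature, n_features):
    """Number of grid cells when every feature is split into the same number of bins."""
    return bins_per_feature ** n_features

# The feature count grows linearly; the number of cells to populate grows exponentially.
for n_features in (1, 2, 3, 10):
    print(n_features, cells_to_cover(10, n_features))
```

With 10 bins per feature, going from 1 to 3 features already raises the cell count from 10 to 1000.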

Machine learning is alchemy, researchers in artificial intelligence at Google have recently proclaimed. Any high school or college student who has ever tried to solve nonlinear systems of equations with the gradient descent method knows that already, kind of… Even for a perfect bowl-shaped cost-surface, the gradient descent method will converge… Read more »
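
The excerpt is cut off before it says how gradient descent behaves, so the following is only a generic sketch of the plain method on an assumed bowl, f(x, y) = x² + y². The starting point, step sizes, and function names are all illustrative choices.

```python
def grad(x, y):
    """Gradient of the assumed bowl f(x, y) = x**2 + y**2."""
    return 2.0 * x, 2.0 * y

def gradient_descent(start, learning_rate, n_steps):
    """Run fixed-step gradient descent and return the final point."""
    x, y = start
    for _ in range(n_steps):
        gx, gy = grad(x, y)
        x -= learning_rate * gx
        y -= learning_rate * gy
    return x, y

# A small step shrinks the point toward the minimum at (0, 0).
small_step = gradient_descent((3.0, 4.0), learning_rate=0.1, n_steps=100)

# A step of 1.0 flips the sign of the point on every iteration, so it never gets closer.
large_step = gradient_descent((3.0, 4.0), learning_rate=1.0, n_steps=100)

print(small_step, large_step)
```

Even on this idealized surface the outcome depends on a hand-picked step size, which is one flavor of the tuning the "alchemy" remark points at.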

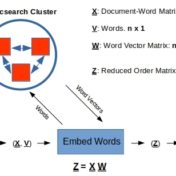

The term-document matrix is a high-order, high-fidelity model for the document-space. High-fidelity in the sense that it will correctly shred-bag-tag a document to represent it as a vector in term-space, as per the vector space model (VSM). It has one entry per term-document pair, with distinct terms (rows) building documents (columns). But do we need all those values to capture this shred-bag-tag effect of … Read more »
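
A minimal sketch of building such a matrix, with terms as rows and documents as columns. The three-document corpus is made up, and whitespace splitting with raw counts is a simplifying assumption; the post may well use a different tokenizer or weighting such as tf-idf.

```python
# Hypothetical toy corpus; each string is one document.
docs = ["the cat sat", "the dog sat", "the cat chased the dog"]

# "Shred" each document into tokens (naive whitespace split).
tokenized = [doc.split() for doc in docs]

# Distinct terms across the corpus, sorted for a stable row order.
terms = sorted({token for doc in tokenized for token in doc})

# Rows are terms, columns are documents, entries are raw term counts.
matrix = [[doc.count(term) for doc in tokenized] for term in terms]

for term, row in zip(terms, matrix):
    print(f"{term:>7} {row}")
```

Each column is one document's vector in term-space, and most entries in a realistic corpus are zero, which is what makes the question of whether all those values are needed worth asking.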