Category Archives: Deep Learning

Attention as Adaptive Tf-Idf for Deep Learning

Attention is like tf-idf for deep learning. Both boost the importance of some words over others. But while tf-idf weight vectors are static for a given set of documents, attention weight vectors adapt to the particular classification objective. Attention derives larger weights for the words that influence the classification objective, thus opening a window into the decision-making process within the deep learning black box…
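To make the static-versus-adaptive contrast concrete, here is a minimal sketch in plain Python. It is not the post's implementation: the toy corpus, the two-dimensional `embeddings`, and the hand-set `sentiment_query` and `topic_query` vectors are all hypothetical stand-ins for what a real model would learn during training. The tf-idf weights depend only on the corpus, while the softmax attention weights shift when the query (the objective) changes.

```python
import math

# Toy corpus (hypothetical), already tokenized.
docs = [
    "the movie was great".split(),
    "the movie was terrible".split(),
    "the food was great".split(),
]

def idf(term, docs):
    # Inverse document frequency: log(N / number of docs containing the term).
    n_containing = sum(1 for d in docs if term in d)
    return math.log(len(docs) / n_containing)

def tfidf_weights(doc, docs):
    # Term frequency times idf; fixed once the corpus is fixed.
    return {t: (doc.count(t) / len(doc)) * idf(t, docs) for t in doc}

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(doc, embeddings, query):
    # Dot-product score of each word against the query, normalized by softmax.
    scores = [sum(q * e for q, e in zip(query, embeddings[t])) for t in doc]
    return dict(zip(doc, softmax(scores)))

# Hypothetical embeddings: axis 0 ~ "sentiment", axis 1 ~ "topic".
embeddings = {
    "the": (0.0, 0.0), "was": (0.0, 0.0),
    "movie": (0.0, 2.0), "food": (0.0, 2.0),
    "great": (2.0, 0.0), "terrible": (2.0, 0.0),
}
sentiment_query = (1.0, 0.0)  # assumed: stands in for a sentiment objective
topic_query = (0.0, 1.0)      # assumed: stands in for a topic objective

doc = docs[0]
tfidf = tfidf_weights(doc, docs)
att_sentiment = attention_weights(doc, embeddings, sentiment_query)
att_topic = attention_weights(doc, embeddings, topic_query)

print("tf-idf:             ", tfidf)
print("attention/sentiment:", att_sentiment)
print("attention/topic:    ", att_topic)
```

In this toy setup tf-idf gives "movie" and "great" the same weight no matter what is being classified, whereas the attention weights favor "great" under the sentiment query and "movie" under the topic query.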

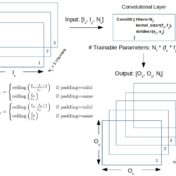

Reconciling Data Shapes and Parameter Counts in Keras

Flowing Tensors and Heaping Parameters in Deep Learning

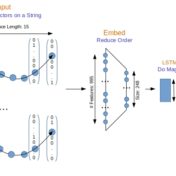

Convolution Nets For Sentiment Analysis

Multiclass Classification with Word Bags and Word Sequences

Sequence Based Text Classification with Convolution Nets

Earlier, with the bag-of-words approach, we were getting some really good text classification results. But will that hold when we take the sequence of words into consideration? There is only one way to find out, so let’s get right into the action: a head-on comparison of a traditional approach (Naive Bayes) with a modern neural one (CNN).

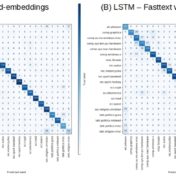

Word Bags vs Word Sequences for Text Classification

Sequence-respecting approaches have an edge over bag-of-words implementations when the sequence is material to classification. Long Short-Term Memory (LSTM) neural nets with word sequences are evaluated against Naive Bayes with tf-idf vectors on a synthetic text corpus for classification effectiveness.
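A small illustration of why word order can matter, as a sketch only: the two example sentences and the helper names `bag_of_words` and `to_sequence` are made up for this note and do not come from the post's synthetic corpus. Two documents with the same words in a different order collapse to the same bag, so any bag-based classifier sees them as identical, while their integer-encoded sequences (the kind of input a sequence model consumes) remain distinct.

```python
from collections import Counter

# Two hypothetical documents: same words, different order.
doc_a = "dog bites man".split()
doc_b = "man bites dog".split()

def bag_of_words(tokens):
    # Word counts only; position information is discarded.
    return Counter(tokens)

def to_sequence(tokens, vocab):
    # Integer-encode tokens in order, preserving position.
    return [vocab[t] for t in tokens]

# Build a vocabulary; index 0 is left free, as is common for padding.
vocab = {w: i for i, w in enumerate(sorted(set(doc_a + doc_b)), start=1)}

bag_a, bag_b = bag_of_words(doc_a), bag_of_words(doc_b)
seq_a, seq_b = to_sequence(doc_a, vocab), to_sequence(doc_b, vocab)

same_bag = bag_a == bag_b   # the bags cannot tell the documents apart
same_seq = seq_a == seq_b   # the sequences can

print("identical bags:     ", same_bag)
print("identical sequences:", same_seq)
```

When the class label depends on who did what to whom, only the sequence representation keeps the information needed to separate these two documents.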